We design systems around the size of the delays we expect. You may have seen the popular table “latency numbers every programmer should know”, which lists some delays that are significant in the technology systems we build.

Teams are systems too. Delays in operations that teams need to perform regularly are significant to their effectiveness. We should know what they are.

SSH to a server on the other side of the world and you will feel the frustration of a delay in the feedback loop, from pressing a key to seeing that character displayed on the screen.

Here are some important feedback loops for a team, with feasible delays. I’d consider these delays tolerable for a team doing their best work (in contexts I’ve worked in). Some teams can do better, lots do worse.

| Operation | Delay |
| --- | --- |
| Run unit tests for the code you’re working on | < 100 milliseconds |
| Run all unit tests in the codebase | < 20 seconds |
| Run integration tests | < 2 minutes |
| From pushing a commit to live in production | < 5 minutes |
| Breakage to paging on-call | per SLO/error budget |
| Team feedback | < 2 hours |
| Customer feedback | < 1 week |
| Commercial bet feedback | < 1 quarter |

What are the equivalent feedback mechanisms for your team? How long do they take? How do they influence your work?

Feedback Delays Matter

They represent how quickly we can learn. Keeping the delays as low as those in the table above means we can get feedback as soon as we have made any meaningful progress. Our tools and systems do not hold us back.

Feedback can be synchronous if you keep these loops this fast. You can wait for feedback and immediately use it to inform your next steps. This helps avoid the costs of context switching.

With fast feedback loops we run tests and fix broken behaviour. We integrate our changes and update our design to incorporate a colleague’s refactoring.

Fast is deploying to production and immediately addressing the performance degradation we observe. It’s rolling out a feature to 1% of users and immediately addressing errors some of them see.

With slow feedback loops we run tests and respond to some emails while they run, investigate another bug, come back and view the test results later. At this point we struggle to build a mental model to understand the errors. Eventually we’ll fix them and then spend the rest of the afternoon trying to resolve conflicts with a branch containing a week’s changes that a teammate just merged.

With slow deploys you might have to schedule a change to production, and risk being surprised by errors reported later that week, once it has finally gone live, asynchronously. Meanwhile users have been experiencing problems for hours.

Losing Twice

As feedback delays increase, we lose twice:

a) We waste more time waiting for these operations (or worse—incur context switching costs as we fill the time waiting)

b) We are incentivised to seek feedback less often, since it is costly to do so, and so we waste more time and effort going in the wrong direction.

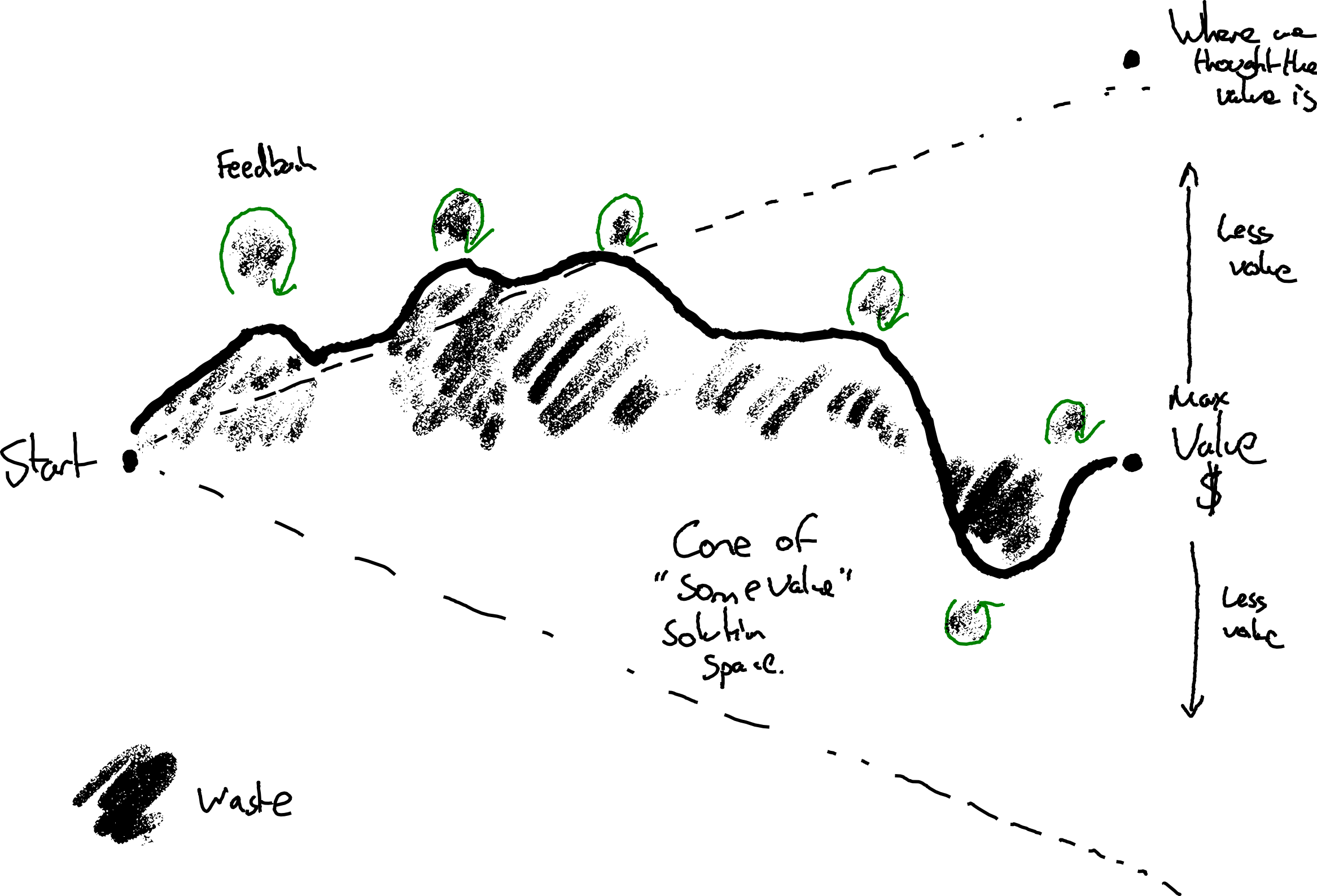

I picture this as a meandering path towards the most value. Value often isn’t where we thought it was at the start. Nor is the route to it often what we envisioned at the start.

We waste time waiting for feedback. We waste time by following our circuitous route. Feedback opportunities can bring us closer to the ideal line.

When feedback is slow it’s like setting piles of money on fire. Investment in reducing feedback delays often pays off surprisingly quickly—even if it means pausing forward progress while you attend to it.

This pattern of going in slightly the wrong direction then correcting repeats at various granularities of change. From TDD, to doing (not having) continuous integration. From continuous deployment to testing in production. From customers in the team, to team visibility of financial results.

Variable delays are even worse

In recent times you may have experienced the challenge of having conversations over video links with significant delays. This is even harder when the delay is variable. It’s hard to avoid talking over each other.

Similarly, it’s pretty bad if we know it’s going to take all day to deploy a change to production. But it’s far worse if we think we can do it in 10 minutes and it actually ends up taking all day. Flaky deployment checks, environment problems, and change conflicts create unpredictable delays.

It’s hard to get anything done when we don’t know what to expect. Like trying to hold a video conversation with someone on a train that’s passing through the occasional tunnel.

Measure what Matters

The time it takes for key types of feedback can be a useful leading indicator of the impact a team can have over the longer term. If delays in your team are important to you, why not measure them and see if they’re getting better or worse over time? This doesn’t have to be heavyweight.

How about adding a timer to your deploy process and graphing the time it takes from start to production over time? If you don’t have enough datapoints to plot deploy delay over time that probably tells you something ;)
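As a rough illustration of how lightweight this can be, here is a minimal sketch that appends each deploy’s duration to a CSV file and summarises it by week. The file name `deploy_times.csv` and the functions `record_deploy` and `weekly_medians` are hypothetical names made up for this example, not part of any particular deploy tool. It assumes your deploy script can note its own start and finish times.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path
from statistics import median

LOG_PATH = Path("deploy_times.csv")  # hypothetical location for the log


def record_deploy(started_at: float, finished_at: float, log_path: Path = LOG_PATH) -> float:
    """Append one deploy duration (in seconds) to the CSV log and return it.

    Both arguments are Unix timestamps, e.g. values from time.time().
    """
    duration = finished_at - started_at
    is_new = not log_path.exists()
    with log_path.open("a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(["finished_at_utc", "duration_seconds"])
        stamp = datetime.fromtimestamp(finished_at, tz=timezone.utc).isoformat()
        writer.writerow([stamp, f"{duration:.1f}"])
    return duration


def weekly_medians(log_path: Path = LOG_PATH) -> dict[str, float]:
    """Group logged deploys by ISO week and return the median duration per week."""
    by_week: dict[str, list[float]] = {}
    with log_path.open(newline="") as f:
        for row in csv.DictReader(f):
            finished = datetime.fromisoformat(row["finished_at_utc"])
            year, week, _ = finished.isocalendar()
            by_week.setdefault(f"{year}-W{week:02d}", []).append(float(row["duration_seconds"]))
    return {week: median(times) for week, times in sorted(by_week.items())}
```

Calling `record_deploy(start, time.time())` at the end of a deploy script, with `start` captured at the beginning, would be enough to start collecting data. The weekly medians can then be pasted into whatever graphing tool the team already uses.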

Or what about a physical or virtual wall for waste? Add to a tally or add a card every time you have wasted 5 minutes waiting. Make it visible. How big did the tally get each week?

What do the measurements tell you? If you stopped all feature work for a week and instead halved your lead time to production, how soon would it pay off?
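One way to put a number on that question is a simple break-even calculation. The sketch below is a deliberately crude model with made-up inputs: the function `weeks_to_break_even` and all of its parameters are hypothetical, and it assumes exactly one person waits for each deploy. It only counts time spent waiting, not the time lost going in the wrong direction, so it likely understates the benefit.

```python
def weeks_to_break_even(
    team_size: int,
    investment_days: float,
    deploys_per_week: float,
    old_lead_time_minutes: float,
    new_lead_time_minutes: float,
    hours_per_day: float = 8.0,
) -> float:
    """Weeks until the waiting time saved equals the time invested in the improvement.

    Assumes one person waits for the full lead time of every deploy (an
    illustrative simplification, not a measured figure).
    """
    invested_hours = team_size * investment_days * hours_per_day
    saved_minutes_per_deploy = old_lead_time_minutes - new_lead_time_minutes
    saved_hours_per_week = deploys_per_week * saved_minutes_per_deploy / 60
    if saved_hours_per_week <= 0:
        # No saving, so the investment never pays off on this measure.
        return float("inf")
    return invested_hours / saved_hours_per_week


# Hypothetical example: a team of 5 spends 5 days halving a 60-minute
# lead time, and the team deploys 40 times a week.
print(weeks_to_break_even(5, 5, 40, 60, 30))  # 10.0
```

With those invented numbers the team invests 200 hours and saves 20 hours of waiting a week, so the week pays for itself in 10 weeks on waiting time alone. Plugging in your own measurements is what makes the answer meaningful.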

Would you hit your quarterly goals more easily if you stopped sprinting and first removed the concrete blocks strapped to your feet?

What’s your experience?

Every team has a different context. Different sorts of feedback loops will be more or less important to different teams. What’s important enough for your team to measure? What’s more important than I’ve listed here?

What is difficult to keep fast? What gets in the way? What is so slow in your process that synchronous feedback seems like an unattainable dream?